Meta-analysis is often hailed as the gold standard in evidence synthesis. Positioned at the top of the evidence hierarchy, it is seen as a reliable guide for research, policy, and practice.

But here’s the reality: a meta-analysis is only as strong as the studies it includes and the methods it applies. Without rigor, the process risks producing garbage conclusions from garbage aggregation.

This article explores critical pitfalls in meta-analysis and provides strategies to ensure meaningful, trustworthy results.

Critical Issues Researchers Overlook

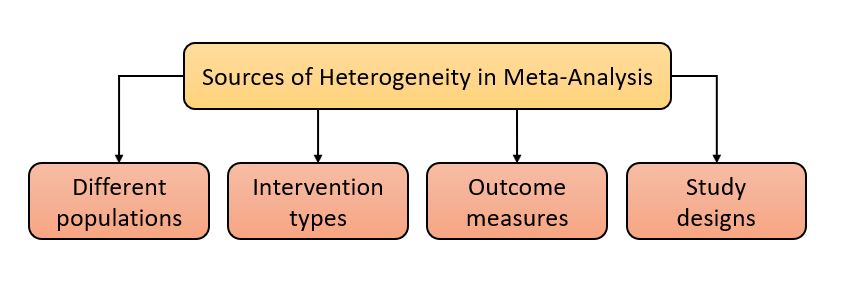

1. Heterogeneity Ignored

Not all studies are created equal. Pooling research from different contexts, populations, or interventions can produce a misleading “average effect” that describes none of the underlying settings well.

- Why it matters: Unexamined heterogeneity hides real variation in effects across studies.

- Best practice: Use subgroup analysis, moderator testing, and sensitivity analysis to separate signals from noise.

Figure: Sources of heterogeneity in meta-analysis. Source: Higgins & Green (2011), Cochrane Handbook.
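Before running subgroup or moderator analyses, it helps to quantify how much heterogeneity is present. The sketch below computes Cochran's Q and the I² statistic from inverse-variance weights. The effect sizes and variances are made-up illustrative numbers, and the function name `heterogeneity` is our own, not part of any library.

```python
def heterogeneity(effects, variances):
    """Return Cochran's Q, its degrees of freedom, and I^2 (in percent)."""
    weights = [1.0 / v for v in variances]  # inverse-variance weights
    pooled = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    # Q: weighted squared deviations of each study from the pooled estimate
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1
    # I^2: share of total variation beyond what chance alone would produce
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return q, df, i2


# Hypothetical effect sizes and their variances (illustration only)
effects = [0.10, 0.30, 0.35, 0.65, 0.80]
variances = [0.01, 0.02, 0.015, 0.01, 0.02]

q, df, i2 = heterogeneity(effects, variances)
print(f"Q = {q:.2f} on {df} df, I^2 = {i2:.1f}%")
```

A high I² is a prompt to look for explanations (population, setting, intervention dose), not a verdict in itself.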

2. Publication Bias

Studies with positive or statistically significant results are more likely to be published. Relying only on published work systematically skews evidence.

- Risk: Inflated effect sizes misguide policy and practice.

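- Solution: Funnel plots and Egger’s test help detect small-study asymmetry, while trim-and-fill estimates an adjusted effect. None of these proves or fully removes publication bias, so searching for unpublished and grey literature remains essential.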
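As a rough illustration of how Egger’s test works, the sketch below regresses each study's standardized effect (effect divided by its standard error) on its precision (one over the standard error). An intercept far from zero suggests funnel plot asymmetry. The data are hypothetical, `egger_intercept` is our own helper name, and the code stops at the t statistic: a p-value would require a t distribution with n − 2 degrees of freedom, which a statistics package can supply.

```python
import math


def egger_intercept(effects, std_errors):
    """Ordinary least squares of (effect / SE) on (1 / SE).

    Returns the intercept, its standard error, and the t statistic.
    Needs at least three studies with differing standard errors.
    """
    z = [y / s for y, s in zip(effects, std_errors)]  # standardized effects
    x = [1.0 / s for s in std_errors]                 # precisions
    n = len(z)
    x_bar = sum(x) / n
    z_bar = sum(z) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sum((xi - x_bar) * (zi - z_bar) for xi, zi in zip(x, z)) / sxx
    intercept = z_bar - slope * x_bar
    residual_ss = sum((zi - intercept - slope * xi) ** 2 for xi, zi in zip(x, z))
    sigma2 = residual_ss / (n - 2)  # residual variance
    se_intercept = math.sqrt(sigma2 * (1.0 / n + x_bar ** 2 / sxx))
    return intercept, se_intercept, intercept / se_intercept


# Hypothetical data: smaller studies (larger SE) report larger effects
effects = [0.90, 0.70, 0.55, 0.40, 0.30, 0.25]
std_errors = [0.40, 0.30, 0.25, 0.15, 0.10, 0.08]

b0, se_b0, t_stat = egger_intercept(effects, std_errors)
print(f"Egger intercept = {b0:.2f} (SE {se_b0:.2f}), t = {t_stat:.1f}")
```

With only a handful of studies the test has little power, so a non-significant result should not be read as evidence that bias is absent.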

3. Low-Quality Studies Included

A weak design does not become credible just by aggregation. Low-quality studies contaminate the evidence base.

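- Tools: The Cochrane risk-of-bias tool assesses individual studies, GRADE rates the certainty of the overall body of evidence, and AMSTAR 2 appraises the quality of the review itself.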

- Outcome: Better validity, more reliable conclusions.
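One simple way to see how much low-quality studies drive a result is a sensitivity analysis: pool all studies, then pool again after excluding those judged at high risk of bias. The sketch below assumes each study already carries a risk-of-bias label; the study names, numbers, and labels are invented for illustration, and the pooling is a plain inverse-variance (fixed-effect) average.

```python
def pooled_estimate(studies):
    """Inverse-variance weighted average of the study effects."""
    weights = [1.0 / s["variance"] for s in studies]
    weighted_sum = sum(w * s["effect"] for w, s in zip(weights, studies))
    return weighted_sum / sum(weights)


# Hypothetical studies with assumed risk-of-bias judgments
studies = [
    {"name": "A", "effect": 0.20, "variance": 0.010, "risk": "low"},
    {"name": "B", "effect": 0.25, "variance": 0.020, "risk": "low"},
    {"name": "C", "effect": 0.70, "variance": 0.015, "risk": "high"},
    {"name": "D", "effect": 0.15, "variance": 0.012, "risk": "some concerns"},
    {"name": "E", "effect": 0.80, "variance": 0.020, "risk": "high"},
]

all_studies = pooled_estimate(studies)
without_high_risk = pooled_estimate([s for s in studies if s["risk"] != "high"])

print(f"All studies:         {all_studies:.3f}")
print(f"Excluding high risk: {without_high_risk:.3f}")
```

If the two estimates diverge sharply, as they do in this toy data, the headline result depends on the weakest studies and should be reported with that caveat.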

4. Statistical Missteps

Meta-analysis depends on correct statistical choices. Wrong effect size metrics or weighting distort outcomes.

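- The effect size metric must fit the outcome: odds ratios or risk ratios for binary outcomes, mean differences or standardized mean differences for continuous ones.

- Choosing between fixed-effect and random-effects models is not neutral. A fixed-effect model assumes a single true effect shared by all studies; a random-effects model allows the true effect to vary from study to study.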
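To make the metric choice concrete, here is a minimal sketch of two common effect sizes and their standard errors: a log odds ratio from a 2×2 table and a raw mean difference from group summaries. The function names and the example counts are our own illustrative assumptions. Odds ratios are pooled on the log scale, which is why the function returns the log value.

```python
import math


def log_odds_ratio(events_t, no_events_t, events_c, no_events_c):
    """Log odds ratio and its standard error from a 2x2 table.

    Assumes no cell is zero; zero cells need a continuity correction.
    """
    log_or = math.log((events_t * no_events_c) / (no_events_t * events_c))
    se = math.sqrt(1 / events_t + 1 / no_events_t + 1 / events_c + 1 / no_events_c)
    return log_or, se


def mean_difference(mean_t, sd_t, n_t, mean_c, sd_c, n_c):
    """Raw mean difference and its standard error (unpooled variances)."""
    md = mean_t - mean_c
    se = math.sqrt(sd_t ** 2 / n_t + sd_c ** 2 / n_c)
    return md, se


# Hypothetical binary outcome: 10/100 events in treatment, 20/100 in control
log_or, se_or = log_odds_ratio(10, 90, 20, 80)
print(f"log OR = {log_or:.3f} (SE {se_or:.3f}), OR = {math.exp(log_or):.2f}")

# Hypothetical continuous outcome: means 5.0 vs 4.5, SD 1.0, 50 per group
md, se_md = mean_difference(5.0, 1.0, 50, 4.5, 1.0, 50)
print(f"Mean difference = {md:.2f} (SE {se_md:.2f})")
```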

Key point: Poor statistical practice = false evidence.
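The practical difference between the two models shows up in the weights and the uncertainty. The sketch below pools the same hypothetical studies under a fixed-effect model and under a random-effects model, using the DerSimonian-Laird estimator for the between-study variance. That estimator is one common choice among several, picked here for simplicity; the data and function names are illustrative assumptions.

```python
import math


def fixed_effect(effects, variances):
    """Inverse-variance pooled estimate and its standard error."""
    weights = [1.0 / v for v in variances]
    estimate = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    return estimate, math.sqrt(1.0 / sum(weights))


def random_effects_dl(effects, variances):
    """DerSimonian-Laird random-effects estimate, standard error, and tau^2."""
    weights = [1.0 / v for v in variances]
    fixed, _ = fixed_effect(effects, variances)
    q = sum(w * (y - fixed) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1
    c = sum(weights) - sum(w ** 2 for w in weights) / sum(weights)
    tau2 = max(0.0, (q - df) / c)  # between-study variance, truncated at zero
    # Random-effects weights add tau^2 to each study's own variance
    re_weights = [1.0 / (v + tau2) for v in variances]
    estimate = sum(w * y for w, y in zip(re_weights, effects)) / sum(re_weights)
    return estimate, math.sqrt(1.0 / sum(re_weights)), tau2


# Hypothetical effect sizes and variances (illustration only)
effects = [0.10, 0.30, 0.35, 0.65, 0.80]
variances = [0.01, 0.02, 0.015, 0.01, 0.02]

fe_est, fe_se = fixed_effect(effects, variances)
re_est, re_se, tau2 = random_effects_dl(effects, variances)

print(f"Fixed effect:   {fe_est:.3f} (SE {fe_se:.3f})")
print(f"Random effects: {re_est:.3f} (SE {re_se:.3f}), tau^2 = {tau2:.3f}")
```

When studies disagree, the random-effects standard error is wider because it accounts for between-study variation. Reporting only the fixed-effect interval in that situation overstates certainty.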

Why This Matters

- False Evidence → False Policy: Governments and NGOs risk adopting ineffective programs.

- Damaged Credibility: Research collapses under scrutiny, eroding trust.

- Lost Learning: Instead of clarity, poor meta-analyses muddy knowledge.

Meta-analysis without rigor is not evidence—it is noise disguised as certainty.

Best Practices at Research & Report Consulting

At Research & Report Consulting, we strengthen meta-analyses by:

- Addressing heterogeneity with subgroup and moderator analyses

- Detecting and adjusting for publication bias with robust diagnostics

- Screening rigorously for study quality

- Ensuring statistical integrity with correct models

- Reporting transparently with inclusion criteria and limitations

Final Thought

Meta-analysis is not about averaging. It is about discerning patterns in complexity. With rigor, it guides scholarship and policy. Without it, it misleads.

What do you think—are meta-analyses in your field applied rigorously, or are shortcuts common? Share your experience below!