Qualitative research depends on trustworthiness, not statistical generalization. When researchers omit key components of an audit trail, reviewers cannot see who decided what, when, and why. As a result, credibility, dependability, and confirmability weaken, even when the dataset is rich and the quotes are compelling.

A rigorous audit trail acts as the study’s backbone. It links raw data, analytic decisions, interpretation, and reporting. Without it, readers cannot verify logic, reviewers cannot assess consistency, and editors cannot judge methodological integrity.

Common Audit Trail Blind Spots (Most Teams Miss These)

These recurring gaps appear across dissertations, journal submissions, NGO evaluations, and consultancy reports:

1. No Decision Log for Sampling Shifts

Researchers often change their sampling strategy mid-study yet fail to record:

- Why the shift happened

- Who approved it

- What criteria changed

- Whether stopping rules were applied

This omission breaks traceability and raises concerns about bias or convenience sampling.

2. Codebook Changes Without Changelog

Qualitative coding is iterative, yet many teams make changes like these without recording them:

- Modify category definitions

- Merge overlapping codes

- Rename parent/child nodes

- Reassign segments

These shifts need a changelog and adjudication record to prove analytic discipline.

3. Missing Reflexivity Documentation

Reflexivity links researcher stance to interpretation. Without it:

- Positionality influences remain invisible

- Interpretive risks appear unmanaged

- Reviewer confidence drops

Both COREQ and SRQR include researcher reflexivity among their core reporting items.

4. Unclear Quote Provenance

Many manuscripts present quotations without:

- Source ID

- Demographic metadata

- Contextual notes

- Coding history

Without provenance, triangulation and auditability collapse.

5. Version Control Chaos

Untracked files, overwritten transcripts, and uncontrolled codebook iterations create analytic inconsistencies. This leads to:

- Unreproducible analysis

- Weak chain of evidence

- Reporting inconsistencies

Researchers must use structured version control systems.
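As a lightweight complement to a full version control system, a checksum manifest can show whether a transcript or codebook file was silently overwritten between two points in the analysis. The sketch below is one possible approach, not a prescribed method: the function names `build_manifest` and `changed_files` are illustrative assumptions, and it assumes the study files sit under a single folder.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the SHA-256 hex digest of a file's bytes."""
    return hashlib.sha256(path.read_bytes()).hexdigest()


def build_manifest(root: Path) -> dict[str, str]:
    """Map every file under root (relative POSIX path) to its digest."""
    return {
        p.relative_to(root).as_posix(): sha256_of(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def changed_files(old: dict[str, str], new: dict[str, str]) -> dict[str, list[str]]:
    """Compare two manifests and list added, removed, and modified files."""
    return {
        "added": sorted(new.keys() - old.keys()),
        "removed": sorted(old.keys() - new.keys()),
        "modified": sorted(k for k in old.keys() & new.keys() if old[k] != new[k]),
    }
```

Saving a manifest at each analytic milestone and comparing consecutive manifests gives a simple record of which files changed and when. It does not store the earlier contents, so it supports rather than replaces a tool such as Git.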

Fast Fixes Editors and Reviewers Value

These interventions immediately strengthen transparency and traceability:

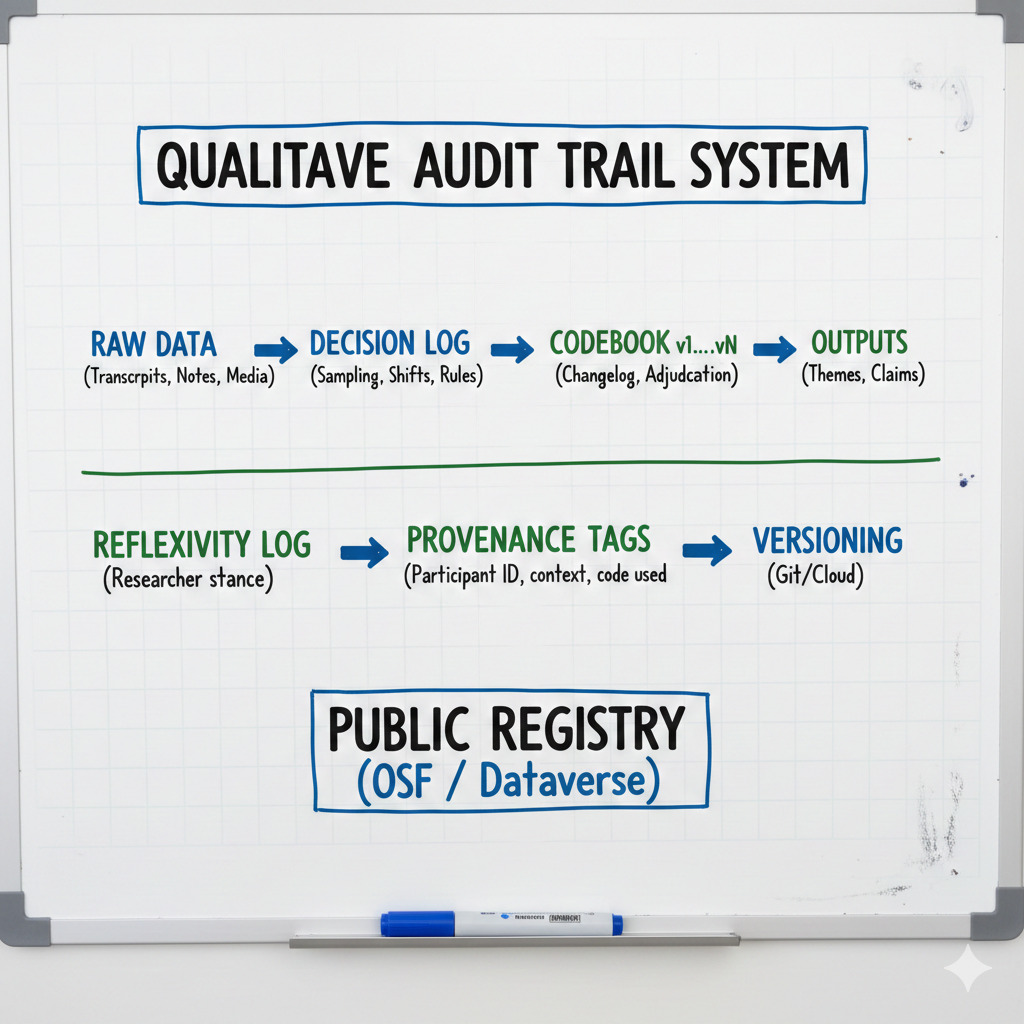

1. Start With a Living Decision Log

Begin on day one. Update continuously.

- Sampling decisions

- Inclusion/exclusion criteria

- Analytic shifts

- Researcher memos

A living log shows methodological discipline.
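One way to keep such a log is as an append-only file in which each decision is a single timestamped record. The following is a minimal sketch under stated assumptions: the field names (`category`, `rationale`, `decided_by`, `approved_by`) and the JSON Lines storage format are illustrative choices, not something COREQ or SRQR prescribes.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from pathlib import Path


@dataclass(frozen=True)
class DecisionEntry:
    category: str     # hypothetical labels: "sampling", "criteria", "analysis", "memo"
    decision: str     # what was decided
    rationale: str    # why it was decided
    decided_by: str   # who made the decision
    approved_by: str  # who signed off on it
    timestamp: str    # when it was logged (UTC, ISO 8601)


def log_decision(log_path: Path, category: str, decision: str,
                 rationale: str, decided_by: str, approved_by: str) -> DecisionEntry:
    """Append one decision to the log; earlier lines are never rewritten."""
    entry = DecisionEntry(category, decision, rationale, decided_by, approved_by,
                          timestamp=datetime.now(timezone.utc).isoformat())
    with log_path.open("a", encoding="utf-8") as fh:
        fh.write(json.dumps(asdict(entry), ensure_ascii=False) + "\n")
    return entry


def read_log(log_path: Path) -> list[DecisionEntry]:
    """Load all entries in the order they were written."""
    with log_path.open(encoding="utf-8") as fh:
        return [DecisionEntry(**json.loads(line)) for line in fh if line.strip()]
```

Because entries are only ever appended, the file itself records who decided what, when, and why, and filtering by `category` can pull out the sampling history for a methods section.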

2. Maintain a Codebook Changelog with Adjudication Register

Every update should track:

- Code definition updates

- Node merges/splits

- Inter-coder disagreements

- Final adjudication decisions

Use Git, or the built-in version history in Notion or Google Docs, to keep every change traceable.
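For teams that manage the codebook in a script or notebook, the changelog and adjudication register can live alongside the code definitions themselves. The sketch below assumes a simple in-memory structure; the class names `Codebook` and `CodebookChange` and their fields are hypothetical, and a real project would also need to persist the result to disk.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class CodebookChange:
    version: int                        # codebook version after this change
    action: str                         # "define", "redefine", or "merge"
    codes: list[str]                    # codes touched by the change
    note: str                           # free-text description
    disagreement: Optional[str] = None  # inter-coder disagreement, if any
    adjudication: Optional[str] = None  # final decision and who made it


@dataclass
class Codebook:
    definitions: dict[str, str] = field(default_factory=dict)
    changelog: list[CodebookChange] = field(default_factory=list)

    @property
    def version(self) -> int:
        """The version number is the count of recorded changes."""
        return len(self.changelog)

    def _record(self, action, codes, note, disagreement=None, adjudication=None):
        self.changelog.append(CodebookChange(
            self.version + 1, action, list(codes), note, disagreement, adjudication))

    def define(self, code: str, definition: str, note: str = "") -> None:
        """Add a new code or update an existing definition."""
        action = "redefine" if code in self.definitions else "define"
        self.definitions[code] = definition
        self._record(action, [code], note)

    def merge(self, sources: list[str], target: str, definition: str,
              disagreement: Optional[str] = None,
              adjudication: Optional[str] = None) -> None:
        """Replace the source codes with one target code and log the decision."""
        missing = [c for c in sources if c not in self.definitions]
        if missing:
            raise KeyError(f"unknown codes: {missing}")
        for code in sources:
            del self.definitions[code]
        self.definitions[target] = definition
        self._record("merge", [*sources, target],
                     f"merged {sources} into {target!r}", disagreement, adjudication)
```

Every mutation goes through `_record`, so the definitions cannot change without a matching changelog entry. Splits and renames could be added in the same pattern.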

3. Add Provenance Tags to All Excerpts

Each quotation should record:

- Participant ID

- Data source

- Collection date

- Code version used

This yields a clear chain of evidence.
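The four fields above map naturally onto a small record type that travels with each excerpt from coding through to the manuscript. This is a sketch only: the `Excerpt` class, its field names, and the bracketed tag format are assumptions made for illustration, not a reporting standard.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Excerpt:
    text: str              # the quotation itself (de-identified)
    participant_id: str    # pseudonymous participant ID
    data_source: str       # e.g. interview, focus group, field note
    collected_on: date     # collection date
    codebook_version: str  # codebook version in force when the excerpt was coded

    def __post_init__(self) -> None:
        # Refuse excerpts with blank provenance so gaps surface early.
        for name in ("text", "participant_id", "data_source", "codebook_version"):
            if not getattr(self, name).strip():
                raise ValueError(f"{name} must not be empty")

    def provenance_tag(self) -> str:
        """Build a compact tag to print next to the quotation."""
        return (f"[{self.participant_id} | {self.data_source} | "
                f"{self.collected_on.isoformat()} | codebook {self.codebook_version}]")

    def cite(self) -> str:
        """Return the quotation followed by its provenance tag."""
        return f'"{self.text}" {self.provenance_tag()}'
```

Calling `cite()` produces the quotation followed by a tag listing participant ID, data source, ISO-formatted collection date, and codebook version, which can be trimmed for the manuscript while the full record stays in the audit files.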

4. Register Materials in OSF or Dataverse

Openly shared materials let reviewers verify the analysis, which can improve a submission's chances of acceptance. Register:

- Codebooks

- De-identified excerpts

- Audit logs

- Reflexive journals

- Protocols

5. Report Against COREQ/SRQR

These frameworks are widely recognized by top journals. Reporting against them signals credibility and methodological rigor.

The Business Case for a Strong Audit Trail

Organizations increasingly rely on qualitative research for policy, product design, and impact evaluation. Poor audit trails increase:

- Reviewer rejection rates

- Audit risks in development projects

- Reproducibility challenges

- Legal exposure in regulated sectors

A strong audit trail protects credibility, institutional memory, and organizational reputation.

Service Mention

A structured audit-trail framework (templates, workflows, chain-of-evidence tables, and registries) helps teams produce traceable, review-ready qualitative studies.

References

- Lincoln, Y. S., & Guba, E. G. (1985). Naturalistic Inquiry.

- O’Brien, B. C., et al. (2014). “Standards for Reporting Qualitative Research (SRQR).”

- Tong, A., Sainsbury, P., & Craig, J. (2007). “Consolidated Criteria for Reporting Qualitative Research (COREQ).”

- OSF Registries (Open Science Framework).
